A new Slack thread opened in the support channel.

I haven’t received any dependency updates in 2 months. What’s going on?

The pit in my stomach sank straight to the floor.

We have a system that opens pull requests for dependency updates to reduce the burden of maintenance. To generate an update, it must run a Gradle task successfully. If the task fails, there is no update. Users could find out about a failure only by checking a page or by noticing the absence of updates.

We had to do better. We prioritized notifications.

The Challenge

It’s important to only send notifications that require action.

Dependency updates must complete five phases before a pull request is opened:

- Launch container

- Clone repository

- Bootstrap Gradle

- Run update task

- Commit/Push

Each step can fail intermittently or consistently with surprisingly similar output.

Consider a bootstrapping phase that failed because we could not resolve a plugin. If the artifact server had a hiccup during resolution, the failure may be intermittent. If the version was checked in with a typo, it requires remediation. In both cases the error message will be about a plugin failing to resolve.

We need to be able to tell the difference between intermittent and consistent failures. This categorization did not already exist, and it was likely to be the source of bugs. Thousands of repositories have automated dependency updates, so it was clear there were going to be a lot of unpredictable edge cases.
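A minimal sketch of one way to draw that line, not a description of the real system: treat a failure as consistent only once the same phase has failed several runs in a row for the same repository. The `FailureTracker` class, the `threshold` of three runs, and the label strings are all assumptions made for illustration.

```python
from enum import Enum
from typing import Dict, Optional, Tuple


class Phase(Enum):
    # The five phases listed above.
    LAUNCH_CONTAINER = "launch container"
    CLONE_REPOSITORY = "clone repository"
    BOOTSTRAP_GRADLE = "bootstrap Gradle"
    RUN_UPDATE_TASK = "run update task"
    COMMIT_PUSH = "commit/push"


class FailureTracker:
    """Hypothetical helper: counts consecutive failures of the same phase."""

    def __init__(self, threshold: int = 3):
        # Assumed value: failures in a row before we call it "consistent".
        self.threshold = threshold
        # repo -> (phase that failed last, length of the current streak)
        self._streaks: Dict[str, Tuple[Phase, int]] = {}

    def record(self, repo: str, failed_phase: Optional[Phase]) -> str:
        """Record one run; pass None for failed_phase when the run succeeded."""
        if failed_phase is None:
            # Any success clears the streak for this repository.
            self._streaks.pop(repo, None)
            return "ok"
        last_phase, count = self._streaks.get(repo, (None, 0))
        # A failure in a different phase starts a new streak.
        count = count + 1 if last_phase == failed_phase else 1
        self._streaks[repo] = (failed_phase, count)
        return "consistent" if count >= self.threshold else "intermittent"
```

Under this rule only a `"consistent"` result would produce a notification. The trade-off is latency: a typo in a plugin version is reported a few runs late, in exchange for staying quiet about an artifact server hiccup.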

To create high-quality notifications, I needed a development workflow fit for purpose.

Onboarding

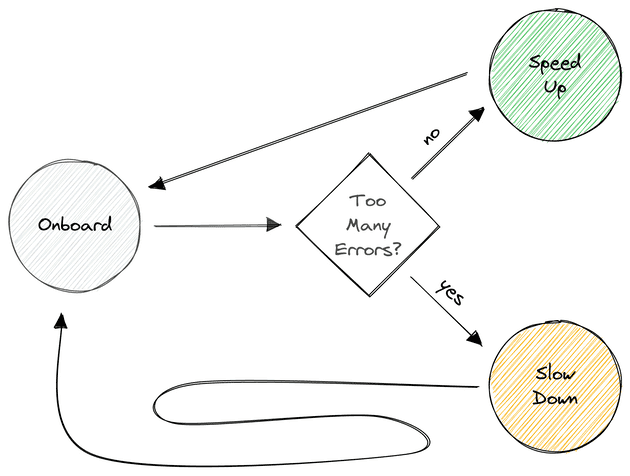

The first part of the workflow design was how fast users would onboard. My strategy was to gradually expand:

- My test repositories

- My team’s repositories

- Early adopters

- Everyone

The speed of onboarding would be determined by how well it was going.
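The gating rule can be sketched in a few lines. This is an illustration under an assumed rule (expand only when no known issues remain open); the `COHORTS` list mirrors the stages above, and `next_cohort_index` is a hypothetical name.

```python
# Rollout stages in the order listed above.
COHORTS = [
    "my test repositories",
    "my team's repositories",
    "early adopters",
    "everyone",
]


def next_cohort_index(current: int, open_issues: int) -> int:
    """Return the cohort index to use for the next round of opt-ins.

    Assumed rule: stay on the current cohort while any issue is open,
    otherwise advance by one stage, stopping at the last cohort.
    """
    if open_issues > 0:
        return current
    return min(current + 1, len(COHORTS) - 1)
```

For example, `next_cohort_index(1, 2)` stays at `1` (my team's repositories) while two issues are open, and `next_cohort_index(1, 0)` moves on to `2` (early adopters).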

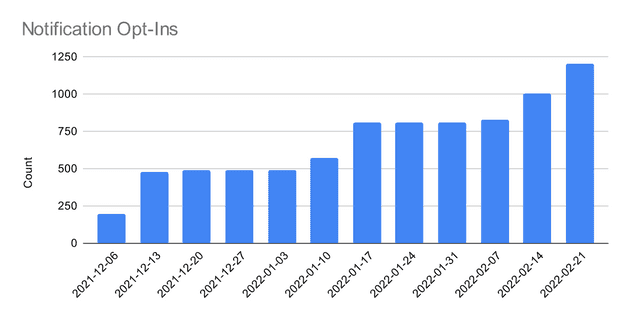

Progress was steady, save for the typical lull at the end of the year. Every week was about either improving the signal or opting in new folks.

Error Signal

In this model we need an error signal. It’s possible to use bug reports or support requests for this, but I didn’t like these options. That pit in my stomach wasn’t going to feel any better if I shoved that burden onto users.

I chose to create a simple approval process.

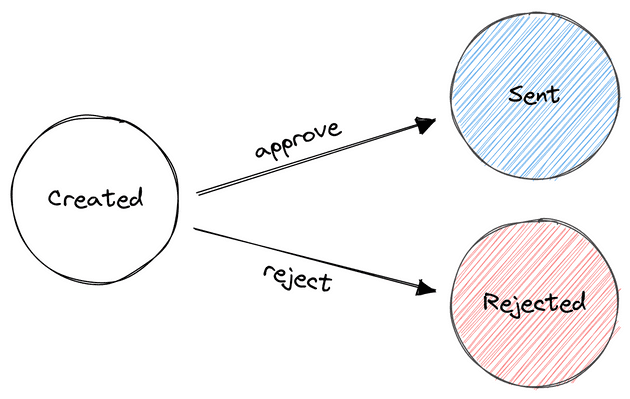

Notifications started in the Created state, and they could transition to Sent or Rejected.

When an issue arrived I would reject the notification, deploy a fix, and generate a new notification.
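The approval flow is a small state machine. Here is a minimal sketch of it, assuming that Sent and Rejected are terminal states (which matches generating a new notification after a rejection). The `Notification` class and its fields are hypothetical; only the three state names come from the process described above.

```python
from enum import Enum


class State(Enum):
    CREATED = "Created"
    SENT = "Sent"
    REJECTED = "Rejected"


# Created may move to Sent or Rejected; both of those are assumed terminal.
ALLOWED = {
    State.CREATED: {State.SENT, State.REJECTED},
    State.SENT: set(),
    State.REJECTED: set(),
}


class Notification:
    """Hypothetical notification record that starts in the Created state."""

    def __init__(self, repo: str, message: str):
        self.repo = repo
        self.message = message
        self.state = State.CREATED

    def transition(self, target: State) -> None:
        """Move to target, refusing any transition not listed in ALLOWED."""
        if target not in ALLOWED[self.state]:
            raise ValueError(
                f"cannot move from {self.state.value} to {target.value}"
            )
        self.state = target
```

Because a rejected notification can never become Sent, the fix-and-retry loop has to produce a fresh `Notification`, so whatever goes out always reflects the deployed fix.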

Approving would serve as a low-risk way to detect errors from real-world data. I would have the first look at every issue going through the system. I knew I had something here, because I suddenly liked my chances of creating a high-quality product.

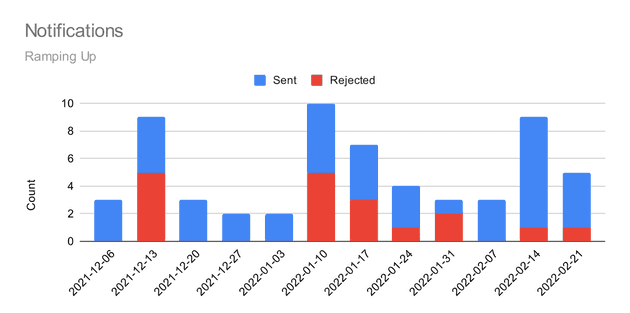

31 notifications were rejected during the ramp-up phase. Most of these issues were fairly small oversights, but they would have made notifications untrustworthy from the start.

Another approach would have been to notify everyone on day one. This would have resulted in triaging 100+ notifications and ending up with 31+ failures to deal with. Being bombarded with many errors at once often means at least some errors go unaddressed.

Onboarding based on an error signal never left me with more than a few issues to focus on. With just a few issues at a time, it was easy to solve them all. It took a bit longer for everyone to be notified, but the content was higher quality.

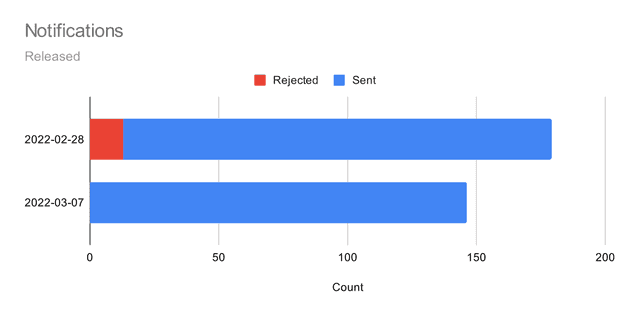

Contrasting the ramp-up chart with the opt-ins, it’s easy to see how the error signal played an important role. New folks were not opted in while issues persisted. As issues were fixed, we started to opt people in faster.

This chart ends on February 21 because at this point I had enough confidence to open this up for everyone else.

The first run-through with everyone saw 13 rejections, all cases that had not appeared among the early adopters. The issues were fixed within the week. The next round went out without a single notification needing to be rejected. It was time to auto-approve.

Only one bug report since.